Danica Kragic Jensfelt is developing robotic systems that can interpret their surroundings in ways resembling human sensory perception, and that can learn by interacting with humans and their environment. The goal is to create robots that can perform tasks in our homes and other complex environments.

Danica Kragic Jensfelt

Professor of Computer Science, specializing in computer vision and robotics

Wallenberg Scholar

Institution:

KTH Royal Institute of Technology

Research field:

Robotic systems capable of interacting with people and their surroundings

A white, meter-high industrial robot stands on a table in Kragic Jensfelt’s lab. It is holding a spoon.

“The robot learns the action of transferring rice from a pan to a plate. It uses a camera as we use our eyes to center the spoon over the bowl,” says Kragic Jensfelt, who is a professor of computer science at KTH Royal Institute of Technology.

Here in the lab she is developing robotic systems capable of interacting with humans in a natural way – learning from us and operating in our environments.

“The aim is to create machines that can perform tasks in dynamic environments, such as our homes.”

Smarter robots with AI

At present most robots perform well-defined, preprogrammed tasks – the same thing time after time, often in factories and other predictable environments. If they are to cope with dynamic environments, in which tasks and conditions can quickly change, they will have to be much more independent. They must be able to gather information from their surroundings, analyze it and change their behavior accordingly.

This is now possible thanks to artificial intelligence – AI. Computer scientists have learnt how robotic systems can develop their own motor skills and build up an understanding of the world around them using large quantities of data and AI technologies such as machine learning and deep learning.

“We can now train robots instead of programming them. This makes it much easier to find solutions for environments in which rapid changes occur. Robots can also find their own solutions that a human being would not think of.”

A key development involves multimodal AI models, which can handle multiple types of data, such as sound, images and force. This is reminiscent of how we perceive the world around us using our various senses. The models make robots better able to understand and cope with complex situations.

Teaching robots to learn

Kragic Jensfelt grasps the stiff arm of the robot. One of the methods used in the lab is for a human to take hold of the robot and guide it through the movements, demonstrating how the task is to be performed. During the movement the robot’s sensors collect data on force and position, for example, so that it can later build models to perform the task itself.

Beside the robot lies a pair of virtual reality glasses, which offer another way of teaching it new tasks. Wearing the glasses, the human can see the robot in a virtual environment and move its arms in a realistic way using handheld controllers.

“We want robots to be able to learn in a natural way from humans. The aim of my research is to show robots how to perform tasks in our environments.”

Kragic Jensfelt has developed methods enabling robots to learn how to grip objects, including those new to them, based on images showing humans using various objects. She has also created methods so that robots can learn without access to large quantities of data.

It’s great when a robot has learnt from data and by example and performs a task in a way that you hadn’t imagined.

Folding clothes and opening bags

A red robot stands with its arms over a table. In front of it is a piece of cloth. The research team is teaching it to fold washing, which is not as simple as it sounds.

“The first step is to smooth out the cloth. The next question concerns the aim of the folding – should the garment be as small as possible or should creases be avoided? Everyone folds clothes differently, which means the robot must be able to adapt.”

Kragic Jensfelt and her team do not build their own robots; they develop technologies that can be used with robots currently available on the market. They have now reached a point where they are seeking to show potential commercial stakeholders what can be achieved so they can proceed with further development in partnership with them.

“We’re aiming to move from what we can do in the lab to a product that a company can test. We’re keen to work with sectors whose tasks involve interaction with soft objects.”

Potential partners include companies that receive bags of clothes for resale. Kragic Jensfelt envisions that robots could do tasks currently performed by humans: opening bags, checking what they contain, putting clothes on a mannequin and taking photographs. Another example is packing meals into cartons so that people no longer have to work on a conveyor belt putting vegetables or sushi into boxes.

“It’s a challenge to use AI to automate tasks in non-repetitive environments of this kind. In an automobile plant, the appearance of each given component doesn’t vary. But no two stalks of broccoli are identical, and it’s impossible for a robot to know what is in a bag of clothes it opens.”

Home help

Kragic Jensfelt’s ultimate goal is for robots to be able to perform advanced tasks in our homes. She is given further impetus by the fact that her son has a physical disability and needs help at home around the clock.

“I can really see the value of these robotic systems. And it’s also great fun making things that do things!”

Text Sara Nilsson

Translation Maxwell Arding

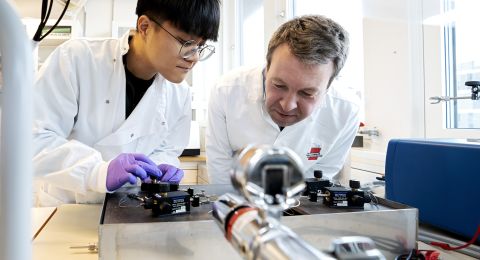

Photo Magnus Bergström